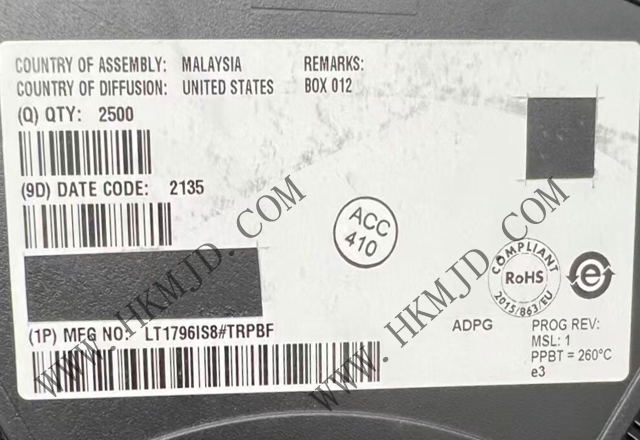

Welcome Here Shenzhen Mingjiada Electronics Co., Ltd.

sales@hkmjd.com

Service Telephone:86-755-83294757

Apple Launches New Robotic Obstacle Avoidance System to Better See Its Surroundings

According to foreign media reports, on 30 December local time Apple announced ARMOR, a new robot obstacle avoidance system developed jointly by Apple and Carnegie Mellon University in the United States.

What the ARMOR system looks like

Traditional humanoid robots typically rely on centralised cameras and lidar mounted on the head or torso for environmental awareness. While this approach is easy to integrate and provides a good field of view, the view is often heavily occluded around the arms and hands.

Although some studies have attempted to integrate tactile sensing into robotic end-effectors, such solutions are costly and difficult to apply to robotic arms at scale. How to use tactile inputs effectively within a policy-learning framework also remains an open problem.

Apple's ARMOR system, by contrast, uses an integrated hardware and software design. On the hardware side, rather than relying on a centralised RGBD camera that captures everything in dense frames at once, the system performs sparse sensing through many distributed sensors to build an egocentric perception model. The research team selected the SparkFun VL53L5CX time-of-flight (ToF) lidar as the base sensing unit.

The sensor measures 6.4 × 3.0 × 1.5 mm and provides 8×8 depth images at 15 Hz, with a diagonal field of view of 63° and a range of up to 4,000 mm. The team fitted each of the robot's arms with 20 such sensors, 40 in total, to build a distributed sensing network.

Every four sensors are connected to one XIAO ESP microcontroller, which reads the data over an I2C bus and forwards it via USB to the robot's onboard computer (Jetson Xavier NX). The data is then transmitted wirelessly to a Linux host equipped with an NVIDIA GeForce RTX 4090 GPU for processing, so that the system maintains a 15 Hz refresh rate.
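The figures above imply the size of the sensing network and its data volume. The following is a minimal sketch of that arithmetic only; the class and field names are illustrative and do not come from the ARMOR software, and it assumes every sensor delivers a full 8×8 frame at 15 Hz.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensingNetwork:
    """Sizing figures taken from the article; names are hypothetical."""
    sensors_per_arm: int = 20
    arms: int = 2
    sensors_per_mcu: int = 4       # four ToF sensors share one microcontroller
    zones_per_sensor: int = 8 * 8  # each sensor returns an 8x8 depth image
    frame_rate_hz: int = 15

    @property
    def total_sensors(self) -> int:
        return self.sensors_per_arm * self.arms

    @property
    def microcontrollers(self) -> int:
        # Ceiling division, in case the sensor count is not a multiple of four.
        return -(-self.total_sensors // self.sensors_per_mcu)

    @property
    def readings_per_frame(self) -> int:
        return self.total_sensors * self.zones_per_sensor

    @property
    def readings_per_second(self) -> int:
        return self.readings_per_frame * self.frame_rate_hz

    @property
    def frame_budget_ms(self) -> float:
        # Time available to read, transmit, and process one frame.
        return 1000.0 / self.frame_rate_hz


net = SensingNetwork()
print(f"sensors: {net.total_sensors}, microcontrollers: {net.microcontrollers}")
print(f"depth readings per frame: {net.readings_per_frame}")
print(f"depth readings per second: {net.readings_per_second}")
print(f"time budget per frame: {net.frame_budget_ms:.1f} ms")
```

Under these assumptions the 40 sensors need 10 microcontrollers and produce 2,560 depth readings per frame, and the whole pipeline has roughly 66.7 ms per frame to keep up with the 15 Hz refresh rate.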

On the software side, the research team developed ARMOR-Policy, a Transformer encoder-decoder architecture similar to Action Chunking with Transformers (ACT). Through imitation learning, the policy learns from collision-free demonstrations of human motion. To train the policy, the team used 311,922 real human motion sequences (about 86.6 hours in total) from the AMASS dataset, which covers a wide range of relevant human movements, such as manipulation, dance, and social behaviours.

The team retargeted these human motion trajectories to the robot's joint configurations and generated tight-fitting obstacle regions around them, so that the trajectories and the obstacles do not collide. In addition, the researchers generated training data for three behaviours: obstacle avoidance motion, emergency stop, and collision-free motion.

ARMOR-Policy's network architecture accounts for the fact that a motion planning problem usually has multiple valid solutions. An additional encoder layer infers a latent variable z, and by varying z the policy can generate different candidate motion trajectories.

In the inference phase, the system computes N candidate motion trajectories in parallel and selects the best path based on the minimum distance between the robot and the point cloud. The network inputs include the latent variable z, the current and target joint positions (28-dimensional vectors), and depth images from the 40 ToF lidar sensors. The depth images are processed by a modified single-channel ResNet18 backbone network to extract 512-dimensional features. The entire network architecture contains about 84 million parameters.
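The candidate selection step can be sketched as follows. This is not the ARMOR implementation: it assumes each candidate is scored by its minimum robot-to-point-cloud distance and that the candidate with the largest such clearance is chosen. The trajectory representation (a list of waypoints, each a list of 3D points on the robot body) and the function names `min_clearance` and `select_best` are hypothetical; a real system would obtain those points from the joint positions via forward kinematics.

```python
import math

Point = tuple[float, float, float]
Waypoint = list[Point]         # 3D points on the robot body at one time step
Trajectory = list[Waypoint]    # sequence of waypoints


def min_clearance(trajectory: Trajectory, point_cloud: list[Point]) -> float:
    """Smallest distance between any robot point and any obstacle point."""
    return min(
        math.dist(robot_point, obstacle_point)
        for waypoint in trajectory
        for robot_point in waypoint
        for obstacle_point in point_cloud
    )


def select_best(candidates: list[Trajectory], point_cloud: list[Point]) -> Trajectory:
    """Pick the candidate that stays farthest from the point cloud (assumed criterion)."""
    return max(candidates, key=lambda t: min_clearance(t, point_cloud))


# One obstacle point halfway between start and goal.
point_cloud = [(0.5, 0.0, 0.0)]

# Two hypothetical candidates, e.g. decoded from two different values of z.
near_miss = [[(0.0, 0.0, 0.0)], [(0.5, 0.05, 0.0)], [(1.0, 0.0, 0.0)]]
wide_arc = [[(0.0, 0.0, 0.0)], [(0.5, 0.3, 0.0)], [(1.0, 0.0, 0.0)]]

best = select_best([near_miss, wide_arc], point_cloud)
print("chosen:", "wide_arc" if best is wide_arc else "near_miss")
print("clearance:", round(min_clearance(best, point_cloud), 3))
```

Because every candidate is scored independently, the scoring parallelises naturally across the N candidates, which fits the article's description of computing them in parallel.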

Compared with the traditional setup of four depth cameras mounted on the robot's head and externally (exocentric sensing), the ARMOR system achieves a significant improvement in obstacle avoidance performance, with a 63.7% reduction in the number of collisions and a 78.7% increase in the success rate. Compared with cuRobo, a sampling-based expert motion planner, ARMOR-Policy also performed better, with 31.6% fewer collisions, a 16.9% higher success rate, and 26 times higher computational efficiency.

In addition, the research team verified the feasibility of the ARMOR system in real-world environments by deploying 28 ToF lidars on the Fourier GR-1 humanoid robot. The experimental results show that the system can update its obstacle avoidance trajectory in real time at 15 Hz.

Time:2025-08-04

Enterprise QQ:1668527835/ 2850151598/ 2850151584/ 2850151585

Business Hours:9:00-18:00

Company Address:Room1239, Guoli building, Zhenzhong Road, Futian District, Shenzhen, Guangdong

CopyRight ©2022 Copyright belongs to Mingjiada Yue ICP Bei No. 05062024-12
